Conditional entropy

In information theory, the conditional entropy (or equivocation) quantifies the remaining entropy (i.e. uncertainty) of a random variable $Y$ given that the value of another random variable $X$ is known. It is referred to as the entropy of $Y$ conditional on $X$, and is written $H(Y|X)$. Like other entropies, the conditional entropy is measured in bits, nats, or bans.


Definition

More precisely, if $H(Y|X=x)$ is the entropy of the variable $Y$ conditional on the variable $X$ taking a certain value $x$, then $H(Y|X)$ is the result of averaging $H(Y|X=x)$ over all possible values $x$ that $X$ may take.

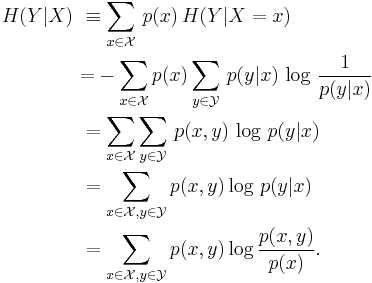

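Given a discrete random variable $X$ with support $\mathcal{X}$ and $Y$ with support $\mathcal{Y}$, the conditional entropy of $Y$ given $X$ is defined as:

$$
\begin{aligned}
H(Y|X) &\equiv \sum_{x \in \mathcal{X}} p(x)\, H(Y|X=x) \\
&= -\sum_{x \in \mathcal{X}} p(x) \sum_{y \in \mathcal{Y}} p(y|x) \log p(y|x) \\
&= -\sum_{x \in \mathcal{X}} \sum_{y \in \mathcal{Y}} p(x,y) \log p(y|x) \\
&= \sum_{x \in \mathcal{X},\, y \in \mathcal{Y}} p(x,y) \log \frac{p(x)}{p(x,y)}.
\end{aligned}
$$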

The last formula above resembles a Kullback-Leibler divergence (relative entropy) in form, although $p(x)$ is not a probability distribution over pairs $(x, y)$. The conditional entropy is always non-negative, and vanishes if and only if $p(x,y) = p(x)$ for every pair with $p(x,y) > 0$. This is when knowing $X$ tells us everything about $Y$.

Note: The supports of $X$ and $Y$ can be replaced by their domains if it is understood that $0 \log 0$ should be treated as being equal to zero.
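The definition can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `conditional_entropy`, the dictionary layout `{(x, y): p(x, y)}`, and the example distribution are all assumptions made for this sketch.

```python
import math

def conditional_entropy(joint, base=2.0):
    """H(Y|X) for a joint pmf given as {(x, y): p(x, y)} (assumed layout)."""
    # Marginal p(x), obtained by summing the joint pmf over y.
    p_x = {}
    for (x, _y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p
    # H(Y|X) = sum over (x, y) of p(x, y) * log(p(x) / p(x, y)).
    # Zero-probability pairs are skipped, i.e. 0 log 0 is treated as 0.
    return sum(
        p * math.log(p_x[x] / p, base)
        for (x, _y), p in joint.items()
        if p > 0.0
    )

# Hypothetical example: X is a fair bit; Y equals 0 whenever X = 0,
# and Y is a fresh fair bit whenever X = 1.
joint = {(0, 0): 0.5, (0, 1): 0.0, (1, 0): 0.25, (1, 1): 0.25}
h = conditional_entropy(joint)  # 0.5 * 0 + 0.5 * 1 = 0.5 bits
```

Passing `base=math.e` or `base=10.0` would give the result in nats or bans instead of bits.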

Chain rule

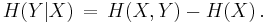

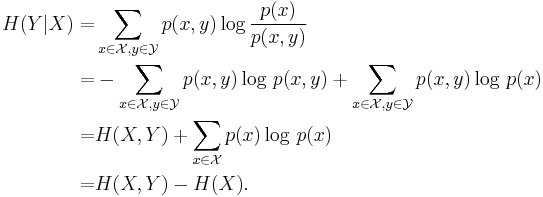

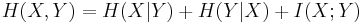

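From this definition and the definition of conditional probability, the chain rule for conditional entropy is

$$
H(Y|X) = H(X,Y) - H(X).
$$

This is true because

$$
\begin{aligned}
H(Y|X) &= \sum_{x \in \mathcal{X},\, y \in \mathcal{Y}} p(x,y) \log \frac{p(x)}{p(x,y)} \\
&= -\sum_{x \in \mathcal{X},\, y \in \mathcal{Y}} p(x,y) \log p(x,y) + \sum_{x \in \mathcal{X},\, y \in \mathcal{Y}} p(x,y) \log p(x) \\
&= H(X,Y) + \sum_{x \in \mathcal{X}} p(x) \log p(x) \\
&= H(X,Y) - H(X).
\end{aligned}
$$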
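The chain rule can be spot-checked numerically. The sketch below computes $H(Y|X)$ directly from the definition and compares it with $H(X,Y) - H(X)$; the helper names and the joint distribution are hypothetical, chosen only for illustration.

```python
import math

def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0.0)

def marginal_x(joint):
    """Marginal p(x) of a joint pmf given as {(x, y): p(x, y)}."""
    p_x = {}
    for (x, _y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p
    return p_x

def conditional_entropy(joint):
    """H(Y|X) in bits, computed directly from the definition."""
    p_x = marginal_x(joint)
    return sum(
        p * math.log2(p_x[x] / p) for (x, _y), p in joint.items() if p > 0.0
    )

# Hypothetical joint pmf over X in {"a", "b"} and Y in {0, 1}.
joint = {("a", 0): 0.4, ("a", 1): 0.1, ("b", 0): 0.2, ("b", 1): 0.3}

lhs = conditional_entropy(joint)                    # H(Y|X)
rhs = entropy(joint) - entropy(marginal_x(joint))   # H(X,Y) - H(X)
```

The two values agree up to floating-point rounding.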

Intuition

Intuitively, the combined system contains $H(X,Y)$ bits of information: we need $H(X,Y)$ bits of information to reconstruct its exact state. If we learn the value of $X$, we have gained $H(X)$ bits of information, and the system has $H(Y|X)$ bits of uncertainty remaining.
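As a small worked case (an illustrative assumption, not taken from the source), let $X$ and $Y$ be two independent fair coin flips. Then:

$$
H(X,Y) = 2 \text{ bits}, \qquad H(X) = 1 \text{ bit}, \qquad H(Y|X) = H(X,Y) - H(X) = 1 \text{ bit}.
$$

Learning $X$ supplies one of the two bits needed to describe the pair, and one bit of uncertainty about $Y$ remains.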

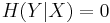

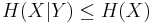

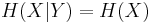

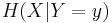

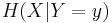

$H(Y|X) = 0$ if and only if the value of $Y$ is completely determined by the value of $X$.

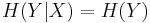

Conversely, $H(Y|X) = H(Y)$ if and only if $Y$ and $X$ are independent random variables.
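Both extreme cases can be illustrated with a short Python sketch. The helper names and the two example distributions are assumptions made for this illustration.

```python
import math

def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0.0)

def conditional_entropy(joint):
    """H(Y|X) in bits for a joint pmf given as {(x, y): p(x, y)}."""
    p_x = {}
    for (x, _y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p
    return sum(
        p * math.log2(p_x[x] / p) for (x, _y), p in joint.items() if p > 0.0
    )

# Case 1: Y is a function of X (here Y = X mod 2, X uniform on {0, 1, 2, 3}).
deterministic = {(x, x % 2): 0.25 for x in range(4)}
h_det = conditional_entropy(deterministic)

# Case 2: X and Y independent, so p(x, y) = p(x) * p(y).
p_x = {0: 0.5, 1: 0.5}
p_y = {0: 0.25, 1: 0.75}
independent = {(x, y): p_x[x] * p_y[y] for x in p_x for y in p_y}
h_ind = conditional_entropy(independent)
h_y = entropy(p_y)
```

In the first case `h_det` is zero; in the second, `h_ind` matches `h_y` up to rounding.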

Generalization to quantum theory

In quantum information theory, the conditional entropy is generalized to the conditional quantum entropy.

Other properties

For any $X$ and $Y$:

$H(X,Y) = H(X|Y) + H(Y|X) + I(X;Y)$, where $I(X;Y)$ is the mutual information between $X$ and $Y$.

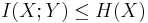

$I(X;Y) \le H(X)$, where $I(X;Y)$ is the mutual information between $X$ and $Y$.

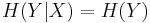

For independent $X$ and $Y$:

$H(Y|X) = H(Y)$ and $H(X|Y) = H(X)$
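The relationship between joint entropy, the two conditional entropies, and mutual information can be checked numerically. In the sketch below each quantity is computed from its own definition, using the standard formula $I(X;Y) = \sum p(x,y) \log \frac{p(x,y)}{p(x)p(y)}$ for mutual information; the helper names and the joint distribution are hypothetical.

```python
import math

def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0.0)

def marginal(joint, axis):
    """Marginal pmf of one coordinate (axis 0 for X, axis 1 for Y)."""
    out = {}
    for pair, p in joint.items():
        out[pair[axis]] = out.get(pair[axis], 0.0) + p
    return out

def conditional_entropy(joint, given_axis):
    """Entropy of the other coordinate given the coordinate `given_axis`."""
    p_given = marginal(joint, given_axis)
    return sum(
        p * math.log2(p_given[pair[given_axis]] / p)
        for pair, p in joint.items()
        if p > 0.0
    )

def mutual_information(joint):
    """I(X;Y) in bits, from p(x, y) * log2(p(x, y) / (p(x) * p(y)))."""
    p_x, p_y = marginal(joint, 0), marginal(joint, 1)
    return sum(
        p * math.log2(p / (p_x[x] * p_y[y]))
        for (x, y), p in joint.items()
        if p > 0.0
    )

# Hypothetical joint pmf over two correlated bits.
joint = {(0, 0): 0.3, (0, 1): 0.2, (1, 0): 0.1, (1, 1): 0.4}

h_xy = entropy(joint)
h_x = entropy(marginal(joint, 0))
h_y_given_x = conditional_entropy(joint, given_axis=0)
h_x_given_y = conditional_entropy(joint, given_axis=1)
mi = mutual_information(joint)
```

For this example `h_xy` equals `h_x_given_y + h_y_given_x + mi` up to rounding, and `mi` does not exceed `h_x`.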

Although the specific conditional entropy, $H(X|Y=y)$, can be either less or greater than $H(X|Y)$, $H(X|Y=y)$ can never exceed $H(X)$ when $p(x)$ is the uniform distribution.
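A small numerical example shows how the specific conditional entropy can fall on either side of its average, and can even exceed $H(X)$ when $X$ is not uniform. The distribution and helper names below are assumptions chosen for illustration.

```python
import math

def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0.0)

def specific_conditional_entropy(joint, y):
    """H(X | Y = y) for a joint pmf given as {(x, y): p(x, y)}."""
    p_y = sum(p for (_x, y2), p in joint.items() if y2 == y)
    # Distribution of X once Y = y has been observed.
    posterior = {x: p / p_y for (x, y2), p in joint.items() if y2 == y}
    return entropy(posterior)

# Hypothetical joint pmf with a non-uniform X: p(X=0) = 0.9, p(X=1) = 0.1.
joint = {(0, 0): 0.8, (0, 1): 0.1, (1, 1): 0.1}
p_x = {0: 0.9, 1: 0.1}
p_y = {0: 0.8, 1: 0.2}

h_x = entropy(p_x)
h_given_y0 = specific_conditional_entropy(joint, 0)  # X is certain: 0 bits
h_given_y1 = specific_conditional_entropy(joint, 1)  # X is a fair bit: 1 bit
# H(X|Y) is the average of the specific values, weighted by p(y).
h_x_given_y = sum(p_y[y] * specific_conditional_entropy(joint, y) for y in p_y)
```

Here `h_given_y0 < h_x_given_y < h_x < h_given_y1`: observing $Y = 1$ leaves more uncertainty about $X$ than there was before the observation, even though the average $H(X|Y)$ stays below $H(X)$.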
